For the last couple of weeks, I’ve been enjoying a little time off, doing whatever comes to mind and trying not to work. Admittedly, that last part may have failed a bit, as the muscle memory of sitting down at my desk every day is hard to beat.

Nevertheless, I enjoyed working my way through the first module of the Machine Learning Specialization course from Andrew Ng, Geoff Ladwig, Aarti Bagul, and Eddy Shyu.

The first module introduces you to machine learning in general (supervised vs unsupervised learning, high-level terminology, cost functions, gradient descent), and helps you implement and apply algorithms for linear regression and classification (in this case logistic regression).
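
To give a flavor of what "cost function" and "gradient descent" mean in practice, here is a minimal sketch of linear regression with one input feature. It is not the course's code: the function names, the learning rate `alpha`, the iteration count, and the tiny made-up dataset are all assumptions chosen for illustration.

```python
import numpy as np


def compute_cost(x, y, w, b):
    """Halved mean squared error: J = 1/(2m) * sum((w*x + b - y)^2)."""
    errors = w * x + b - y
    return np.mean(errors ** 2) / 2


def gradient_descent(x, y, alpha=0.1, iterations=1000):
    """Fit y ~ w*x + b by batch gradient descent, starting from w = b = 0."""
    w, b = 0.0, 0.0
    for _ in range(iterations):
        errors = w * x + b - y
        # Partial derivatives of the cost with respect to w and b.
        dj_dw = np.mean(errors * x)
        dj_db = np.mean(errors)
        # Step downhill, scaled by the learning rate.
        w -= alpha * dj_dw
        b -= alpha * dj_db
    return w, b


# Made-up data lying exactly on the line y = 2x + 1.
x = np.array([0.0, 1.0, 2.0, 3.0])
y = 2 * x + 1

w, b = gradient_descent(x, y)
print(w, b, compute_cost(x, y, w, b))
```

Each iteration nudges `w` and `b` in the direction that lowers the cost, so on this toy data they should end up very close to 2 and 1.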

If this all sounds like hard silly math, it really isn’t but also definitely is 😅.

In short:

Linear regression is used to predict a numeric value or to find a correlation. For example: Can we predict the temperature of the ocean based on the salinity of the water? (There’s an example of the code below.)

Classification is used to put elements in distinct groups. For example: Is an email spam or not spam? Depending on your definition of spam the answer might always be ‘not spam’.

(When classification fails)

To apply my newly acquired knowledge I found a dataset of 60 years of oceanographic data and the challenge to predict water temperature based on the salinity, which is the amount of salt per liter. Not to be confused with sillynity, which is the amount of foolishness per liter.

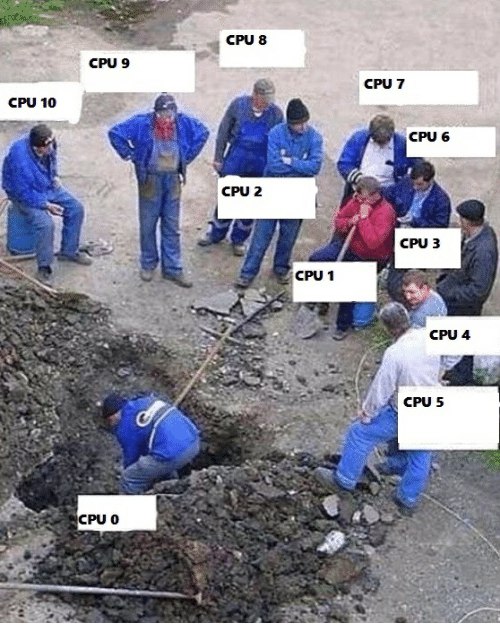

Initially, I took the step to re-use the algorithms I wrote in the course, and although that did work, they were unoptimized and therefore led to the following situation.

Luckily, a lot of smart people wrote much better code and put it into a Python package called scikit-learn.

    from sklearn.linear_model import SGDRegressor
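
The original fitting code is not shown beyond that import, so what follows is only a sketch of how `SGDRegressor` might be used for this kind of problem. The data is synthetic (the salinity range, slope, and noise level are invented), it uses a single feature rather than the two behind the results below, and the `StandardScaler` step and hyperparameters are assumptions rather than the settings actually used.

```python
import numpy as np
from sklearn.linear_model import SGDRegressor
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the oceanographic data (invented numbers):
# temperature falls as salinity rises, plus some noise.
rng = np.random.default_rng(0)
salinity = rng.uniform(32.0, 35.0, size=(500, 1))
temperature = 120.0 - 3.2 * salinity[:, 0] + rng.normal(0.0, 0.5, size=500)

# Stochastic gradient descent is sensitive to feature scale,
# so standardize the input before fitting.
scaler = StandardScaler()
X = scaler.fit_transform(salinity)

model = SGDRegressor(max_iter=1000, tol=1e-3, random_state=0)
model.fit(X, temperature)

print(f"model parameters: w: {model.coef_}, b: {model.intercept_}")

# Predict for a new salinity value, scaled the same way as the training data.
prediction = model.predict(scaler.transform([[33.5]]))
print(prediction)
```

Note that the learned weight applies to the standardized feature, so any new input has to go through the same scaler before prediction.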

Results

    model parameters: w: [-2.2189843 -0.8562193], b:[10.61808813]

In the end, you receive the weights and the bias for your formula (temperature = (x1 * w1) + (x2 * w2) + b), which you can then use to predict the water temperature based on the salinity. I know, right? Who knew machine learning could be so useful and applicable to day-to-day life.
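
As a small illustration, the formula can be evaluated directly with the parameters from the results above. The helper name `predict_temperature` and the example inputs are hypothetical. The post does not say what the two features are or how they were scaled, so real inputs would need the same preparation as the training data.

```python
import numpy as np

# Parameters copied from the results above.
w = np.array([-2.2189843, -0.8562193])
b = 10.61808813


def predict_temperature(x):
    """Linear model: temperature = x1*w1 + x2*w2 + b."""
    return float(np.dot(x, w) + b)


# Hypothetical feature vectors, chosen only to show how the terms combine.
at_zero = predict_temperature(np.array([0.0, 0.0]))   # just the bias b
one_step = predict_temperature(np.array([1.0, 0.0]))  # b + w1

print(at_zero, one_step)
```

With both features at zero the prediction is simply the bias, and raising the first feature by one unit shifts the prediction by `w1`.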

I was surprised that the scikit-learn results and my own were not far apart, but far enough that it does matter, and someday I’m gonna try and figure out why! Someday…

All in all a great course, although the video format takes some getting used to and doesn’t fit my learning style 100%.

PS: None of this work was done by OpenAI’s GPT-4, although it would have saved me days and days of work.